What if uploading your photos to the cloud made you and your family part of an AI training product?

Apparently, that's happening with an app called Ever.

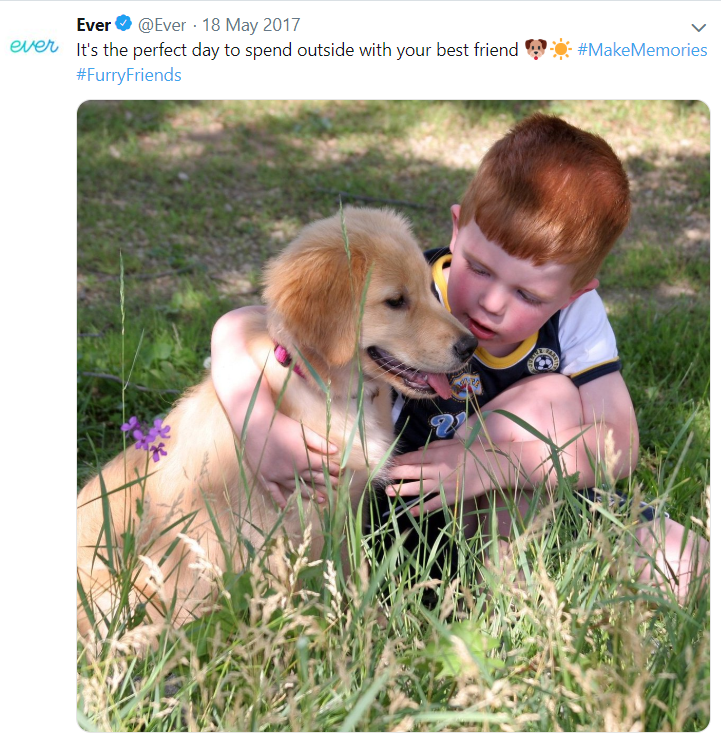

Its Twitter feed is full of heartwarming family photos.

However, NBC News reports that the Ever app has been using customers' photos to train its facial recognition AI and then offering to sell that technology.

Millions of customers using it to store life's moments may now be part of the company's AI business model.

The practice was hidden until NBC came calling:

"What isn’t obvious on Ever’s website or app—except for a brief reference that was added to the privacy policy after NBC News reached out to the company in April—is that the photos people share are used to train the company’s facial recognition system, and that Ever then offers to sell that technology to private companies, law enforcement and the military.

In other words, what began in 2013 as another cloud storage app has pivoted toward a far more lucrative business known as Ever AI — without telling the app’s millions of users."

Serious privacy questions need to be answered by Ever

We asked SecureWorld speaker and podcaster Rebecca Herold, CEO of The Privacy Professor, about the red flags she sees in this case. Here is what she told us:

This is an interesting situation, and certainly one that shows how personal information, in all forms (not just text, but also images, audio, video, etc.), will be used by some organizations if there are no perceived limitations (through laws, standards, or other legal requirements) preventing them from using that information, if it can result in benefit to the organization.

The Ever privacy policy (https://www.everalbum.com/privacy-policy) still does not include any explicit mention of using the app users’ photos, or any other information, within their own AI development activities. This is a huge omission (possibly by design?) and certainly does not support transparency to the app users for how their information will be used by Ever for development of their other products. There is a lone statement (two sentences) that they may have added to address this:

“Your Files may be used to help improve and train our products and these technologies. Some of these technologies may be used in our separate products and services for enterprise customers, including our enterprise face recognition offerings, but your Files and your personal information will not be.”

In Herold's opinion, this suggests that Ever does not really consider photos of you and your family to be personal information, which is at odds with how most people view privacy.

How will government regulators view this?

Companies update their privacy statements on a regular basis, and most of us know it. The new statement pops up and we're forced to either accept the terms or quit the service.

However, Herold raises an interesting question about what happened in this case:

It would be interesting to know how the FTC views this change of personal information use, after collecting millions of photos, that is different from what the privacy policy promised when the personal data was collected. Would this be a violation of the FTC Act, Section 5? If I was a user of the Ever app, or any of my family members are/were, I’d definitely be looking into that.

This kind of move by industry will likely strengthen the case of those pushing for additional privacy regulation, and it will certainly lead to more privacy discussions at regional SecureWorld conferences.

New waves of technology are going to change everything

The company's pivot toward AI, and the privacy implications that follow, show how rapidly things are shifting. Everyone will need to rethink their views on, and uses of, artificial intelligence and other new technologies.

The Bald Futurist, Steve Brown, is speaking about this in his keynote at SecureWorld Atlanta, May 29-30, 2019, where he will explain what we are up against with AI and the opportunities that exist.
